
I am a PhD student in Mechanical Engineering at the Biomimetics & Dexterous Manipulation Laboratory, advised by Prof. Mark Cutkosky. I also have the privilege of collaborating with Prof. Oussama Khatib in the Stanford Robotics Center. I was an MSc student in the Stanford Vision and Learning Lab, working with Prof. Jiajun Wu and Prof. Fei-Fei Li. I previously received my dual B.S. in Mechanical Engineering from Shanghai Jiao Tong University and Purdue University, where I was fortunate to be advised by Prof. Karthik Ramani on Human-Computer Interaction (fun fact: Prof. Ramani was a PhD student of Mark's!). I support diversity, equity, and inclusion. If you would like to chat with me about research, career plans, or anything else, feel free to reach out! I would be happy to support people from underrepresented groups in the STEM research community, and I hope my expertise can help you.

Email: hao.li [@] cs.stanford.edu

Resume / Google Scholar / Twitter / GitHub


I have been working on designing, fabricating, and understanding tactile sensors and the rich information they provide. I'm also broadly interested in AI and robotics, including but not limited to perception, planning, control, hardware design, and human-centered AI. The goal of my research is to build agents that can achieve human-level learning and adapt to novel and challenging scenarios by leveraging multisensory information, including vision, audio, and touch.


Yingke Wang, Hao Li, Yifeng Zhu, Koven Yu, Ken Goldberg, Li Fei-Fei, Jiajun Wu, Yunzhu Li, Ruohan Zhang. Under review. project page / paper. We present IMPASTO, a robotic oil-painting system that integrates learned pixel dynamics models with model-based planning for high-fidelity reproduction of oil paintings.


Chengyi Xing*, Hao Li*, Yi-Lin Wei, Tian-Ao Ren, Tianyu Tu, Yuhao Lin, Elizabeth Schumann, Wei-Shi Zheng, Mark Cutkosky. International Conference on Intelligent Robots and Systems (IROS), accepted. project page / arXiv. We present the design of FBG-based wearable tactile sensors capable of transferring tactile data collected by human hands to robotic hands.


Hao Li*, Chengyi Xing*, Saad Khan, Miaoya Zhong, Mark Cutkosky. Robotics and Automation Letters (RA-L). project page / arXiv / code. We present the design of underwater whisker sensors with a sim-to-real learning framework for contact tracking.


Michael A. Lin, Hao Li, Chengyi Xing, Mark Cutkosky. project page / arXiv / video / code. We present a method for using passive whiskers to gather sensory data while brushing past objects during normal robot motion.


Ruohan Gao*, Yiming Dou*, Hao Li*, Tanmay Agarwal, Jeannette Bohg, Yunzhu Li, Li Fei-Fei, Jiajun Wu. Conference on Computer Vision and Pattern Recognition (CVPR), 2023. dataset demo / project page / arXiv / video / code. We introduce a benchmark suite of 10 tasks for multisensory object-centric learning, and a dataset of multisensory measurements for 100 real-world household objects.


Ruohan Gao*, Hao Li*, Gokul Dharan, Zhuzhu Wang, Chengshu Li, Fei Xia, Silvio Savarese, Li Fei-Fei, Jiajun Wu. International Conference on Robotics and Automation (ICRA), 2023. project page / arXiv / video / code. We introduce a multisensory simulation platform with integrated audio-visual simulation for training household agents that can both see and hear.


Hao Li*, Yizhi Zhang*, Junzhe Zhu, Shaoxiong Wang, Michelle A. Lee, Huazhe Xu, Edward Adelson, Li Fei-Fei, Ruohan Gao†, Jiajun Wu†. Conference on Robot Learning (CoRL), 2022. project page / arXiv / video / code. We build a robot system that can see with a camera, hear with a contact microphone, and feel with a vision-based tactile sensor.


Yuhao Lin*, Yi-Lin Wei*, Haoran Liao, Mu Lin, Chengyi Xing, Hao Li, Dandan Zhang, Mark Cutkosky, Wei-Shi Zheng. Conference on Robot Learning (CoRL), accepted. project page / arXiv. We propose TypeTele, a type-guided dexterous teleoperation system that introduces dexterous manipulation types into teleoperation, enabling dexterous hands to perform actions that are not constrained by human motion patterns.


Rachel Thomasson, Alessandra Bernardini, Hao Li, Chengyi Xing, Amar Hajj-Ahmad, Mark Cutkosky. Robotics and Automation Letters (RA-L). project page / code. We introduce SLIM, a symmetric, low-inertia robotic manipulator with a bidirectional hand and integrated wrist designed for safe and effective operation in constrained, contact-rich environments.



Yi-Lin Wei, Jian-Jian Jiang, Chengyi Xing, Xian-Tuo Tan, Xiao-Ming Wu, Hao Li, Mark Cutkosky, Wei-Shi Zheng. Conference on Neural Information Processing Systems (NeurIPS), 2024. project page / arXiv / code. We explore a novel task, DexGYS, which enables robots to perform dexterous grasping based on human commands expressed in natural language.


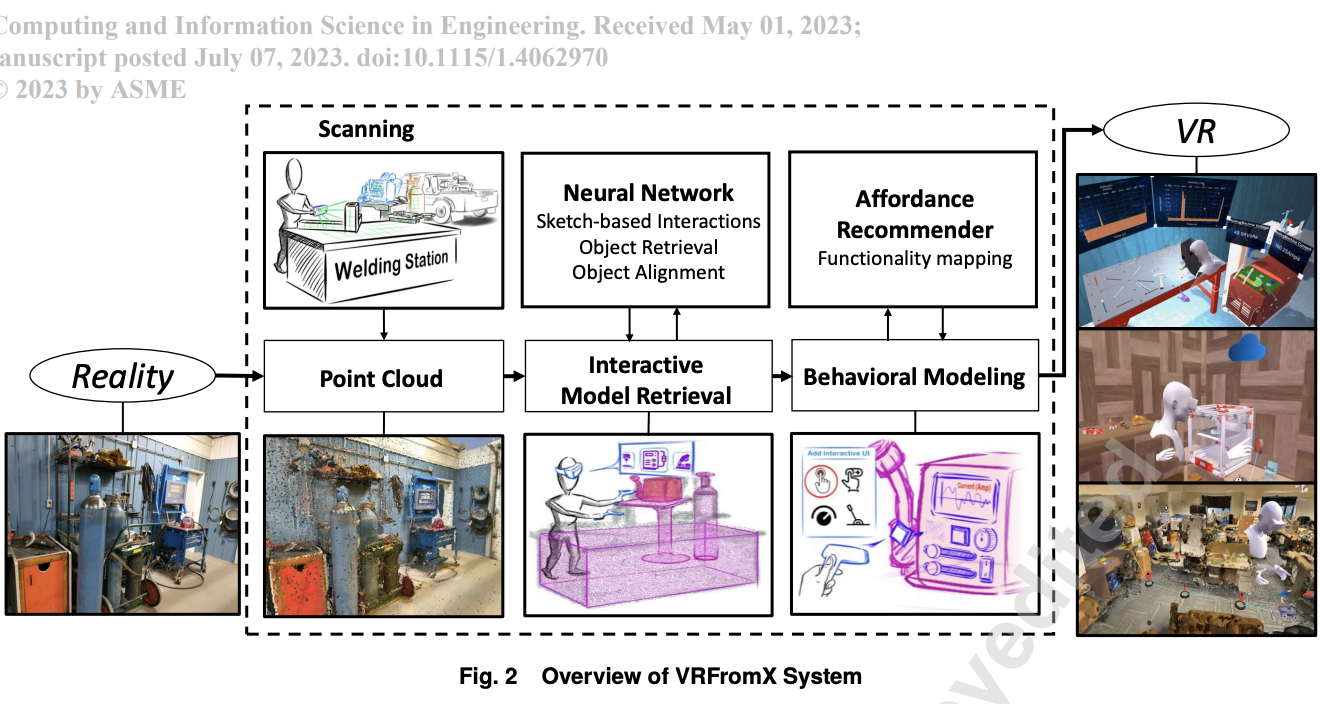

Ananya Ipsita*, Runlin Duan*, Hao Li*, Subramanian Chidambaram, Yuanzhi Cao, Min Liu, Alexander J Quinn, Karthik Ramani. Journal of Computing and Information Science in Engineering (JCISE). arXiv. Using our VRFromX system, we performed a usability evaluation on a welding use case with 20 DUs, 12 of whom were novices in VR programming.


Ananya Ipsita, Hao Li, Runlin Duan, Yuanzhi Cao, Subramanian Chidambaram, Min Liu, Karthik Ramani. Conference on Human Factors in Computing Systems (CHI), 2021. project page / arXiv / video. We build a system that allows users to select regions of interest (ROIs) in a scanned point cloud or sketch in mid-air to enable interactive VR experiences.


Reviewer for CVPR, CoRL, ICLR, ICRA, RA-L, CHI, HRI, ICCV, CogSci

Organizer of ENGR319: Stanford Robotics Seminar, Stanford University, 2024 - Present


Course Assistant in AA274A: Principles of Robot Autonomy, Stanford University, 2022

Course Assistant in CS231N: Deep Learning for Computer Vision, Stanford University, 2023
